At Sequin, we replicate APIs to Postgres databases in real-time. We want to provide our customers with the experience of having direct, row-level access to their data from third-party platforms, like Stripe and Airtable.

After the concept of Sequin crystallized, we knew we had to get something to market quickly. But it turns out that most APIs are not designed to service real-time replication. The first API we supported – Airtable – is no exception.

Airtable's API presents some unique challenges, which I'll touch on first. Then I'll describe how we built a minimum-viable sync process that was fast and reliable enough to get us our first customers. From there, you'll see how we iteratively built and improved our sync process layer-by-layer.

Challenges

With Airtable's API:

- There's no way to figure out what's changed

- The schema can change at any time

- Throughput is low

Breaking these challenges down:

No way to figure out what's changed

There's no way around it: we have to sweep every table, all the time.

For Airtable, it's difficult to see what's changed in a base. First, you have to make requests against each individual table. Given that Airtable's API is limited to 5 requests/second, if you have more than a few tables our dream of sub-second lag time becomes difficult to attain.

Second, deletes are very common in Airtable. And yet, there's no way to easily tell from the API what's been deleted – you have to check every row.

The last curveball is Airtable's inconsistent treatment of the last_updated field for records. For example, changes to computed fields do not affect this timestamp. So, we can't poll for changes to them.

The schema can change at any time

Airtable has a flexible schema. Users can add, drop, rename, and change the type of columns at any time. This means the shape of the data we receive from the API is constantly changing.

Throughput is low

Airtable's responses will contain a maximum of 100 records. And we can only make 5 requests per second. This, of course, means we can only process a maximum of 500 records per second.

Iteratively building up our sync

We launched with the minimum viable version of our sync process. Once we had something that worked, we began to layer on sync optimizations that made the sync faster and more efficient.

Lowest-common denominator: the rebuild sync

Because the Airtable schema can change at any time and we have to sweep the full Airtable API on each sync run, the lowest-common denominator was what we call "the rebuild sync." In the rebuild sync, we perform every single operation necessary to instantiate a Postgres database and bring it to parity with the data in Airtable.

To support this process, our database is split into two schemas:

publicpublic_swap

Our customers read from the public schema. The public_swap or "swap schema" is where the sync takes place during a rebuild.

At the top of each sync cycle, we initialize all the tables in public_swap as defined by the Airtable base's current schema. We then pull all the records for each table from Airtable and insert them into the tables in the swap schema.

At the end of the sync cycle, we open up a database transaction. Inside that transaction, we drop every table in public and "promote" every table in public_swap to public.

From our customers' point of view, it looks like their public schema suddenly receives all updates from Airtable, all at once, at discrete intervals.

We decided it made sense to start with doing this rebuild every sync cycle for a couple of reasons:

- We can use the same process for syncing for the first time or the millionth time.

- Using migrations would be tricky. When the schema changes, we'd have to map out all the paths that were taken to get from schema A → schema B. We avoid this entirely by just rebuilding all the tables from scratch.

Sync to public, only rebuild on schema changes

As you might imagine, there were a few immediate problems with running a full rebuild on every sync cycle:

- We were rebuilding the tables in the swap schema and "promoting" them to public on each sync run. Promoting is an expensive operation. We had to drop all the tables in

public. This meant we were constantly marking Postgres tuples for deletion, keeping the vacuuming functionality very busy. - It increased the max lag time of a given row. This is because a row first had to get synced to the swap schema, and then wait for the rest of the rows to get synced to the swap schema before being promoted to the public schema.

So, for our database this meant high write IOPs and high network utilization. For our customers, it meant a suboptimal max lag time.

The next level of our sync was to sync directly to the public schema. To pull this off, we needed to incorporate a few changes.

First, we needed a way to upsert records from Airtable right to the public schema. Here's an example of what the upsert statement looked like:

insert into public.products (id,created_time,name,size,color)

values $1, $2, $3, $4, $5

on conflict (id) do update set

id=excluded.id, created_time=excluded.created_time, name=excluded.name, size=excluded.size, color=excluded.color

where (created_time, name, size, color) is distinct from (excluded.created_time, excluded.name, excluded.size, excluded.color)

There are a few key components of this upsert statement:

- The

on conflict (id)is what switches Postgres from performing an insert to performing an update. When updating, thesetclause is used instead. Thesetclause here is just a re-iteration of the mapping that theinsertclause makes between columns and their values. - The

where ... is distinct fromis a key clause. Without it, the upsert would perform a write operation for every single row in the upsert clause, regardless if there were any changes. This trades a write-heavy characteristic for a read-heavy characteristic, which is more efficient for Postgres. Furthermore, for customers using replication slots, this means we'll only generate a WAL entry when something has actually been created/updated.

Note that we're putting the database in charge of determining what's changed. We're constantly sending rows to Postgres, and Postgres is diffing those rows with what it has stored. If there are changes, then it performs a write.

This upsert logic lets us write directly to public. But, we still need to trigger a full rebuild if the base's schema is modified in some way (eg a table is added or a column is changed).

So, at the beginning of each sync we need to check to see if the Airtable schema has changed since our last sync. We pull the schema, hash it, then compare that hash with the hash of the last build. If the hashes are the same, we sync right to public. If the hashes are different, we kick off a full rebuild.

This sync process was a big step up from the naive first implementation. For our customers, this reduced the lag time of a given row significantly. For Postgres, we traded high write IOPs for high read IOPs, which meant our database could handle greater load. It also effectively eliminated the fraction of time a table is in a write lock, removing all kinds of weird, intermittent performance hiccups.

In-memory fingerprinting

Layer two of our sync process bought us considerably more room on our Postgres database. But there was one last major piece of low-hanging fruit.

While more optimal than the constant rebuild, the upsert statement above still places a heavy burden on the Postgres database. Every sync cycle, Postgres is given the responsibility of diffing each batch of rows we pull from Airtable with what it has stored. Read IOPs and network were both still high.

We knew it would be very beneficial to hoist this diffing work up to our workers.

Traditionally, this is the moment I'd reach for Redis. But sharing memory between worker instances is precisely where Elixir/OTP shines. We can keep a map in-memory of %{ "record_id" => "record_hash" }, where record_hash is an MD5 hash of all the key/value pairs of a given record. When we request 100 records from Airtable, we can compare their latest hashes with what we have in memory. Then, we only perform an upsert of the records that have new hashes. After Postgres confirms the write succeeded, we write the latest hashes to our in-memory cache.

The algorithm to employ this in-memory hash is pretty straightforward:

- Hash the incoming records we just pulled from Airtable

- Diff the incoming hashes with the hashes we have in-memory

- Upsert all records that have changed into Postgres

- Update the in-memory hash

By keeping the upsert from our second layer intact, we're resilient to failures in this pipeline. If eg the update step in 4 fails, no big deal, those records will just be re-upserted on the next sync, meaning just a little more work for Postgres. If we're unable to upsert to Postgres (eg Postgres is down), we don't update our in-memory hash, so try again to insert on the next go around.

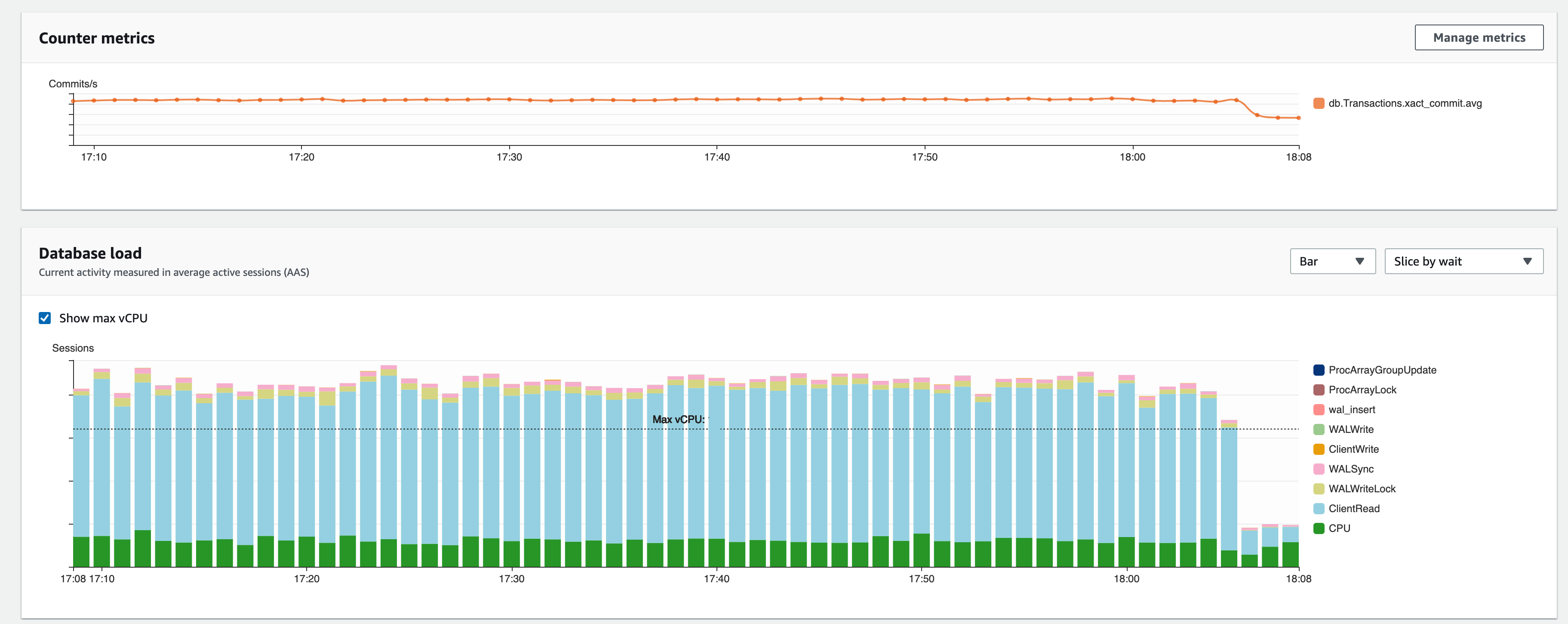

This particular improvement had a massive impact. Our read IOPs and network utilization each dropped by over 90%:

There was an uptick in load on our workers, as they were now doing all the diffing. But this was more than offset by the reduction in time they spent waiting for Postgres.

Support read-after-writes with a proxy

At this point, our sync is efficient and we're able to approach the theoretical replication limit given the limitations of the Airtable API.

However, we felt ourselves missing an answer for writes. We believe in a one-way data flow: reads are best made in SQL, but for writes you'll want to make an API request so you can properly handle eg validation errors. But, even if the replication lag is only a few seconds, any code that performs a read-after-write will not work.

For example, we have some customers that are powering their React web applications with an Airtable back-end. Let's say they have a workflow to let users update their email address. The user clicks an "Edit profile" button, and is prompted for their new email address. After updating, they're sent back to their profile page.

Here's the problem: when they save their new email address, that triggers an API PATCH request to Airtable on behalf of that user. But there's a race condition: when the user is redirected back to his or her profile page, the React app re-fetches their user record from Postgres. There's no guarantee, though, that the updated user record (with the new email) has propagated via Sequin to their Postgres database. So the React app grabs and displays the stale email, confusing the user.

So we built a proxy that users can write through to Airtable. Functionally, it's a reverse proxy. You just prepend the proxy's hostname to whatever Airtable API request you want to make, eg:

PATCH https://proxy.sequin.io/api.airtable.com/v0/appF0qbTS1QiA025N/Users

The proxy attempts to write the change to Airtable first. If the Airtable write succeeds, the change is written to the database and then the requestor receives a 200. This means that the change that was just written is guaranteed to be present in any subsequent database read.

Layer by layer

Our wisest move was to build the initial sync to serve the lowest common denominator, even if it was slow and inefficient. As long as we were reliable, delivered on the replication time we estimated in our console, and were resilient to eg schema changes, our customers were happy.

Reflecting back on our discussions before launch, a lot of our original assumptions about what our sync would need were wrong. Luckily, we time-boxed the initial build and got our MVP sync (the rebuild sync) to market as fast as possible. From there, servicing customers and learning about their needs helped inform the shape of each subsequent layer and its priority. Notice how we slowly layered on performance optimizations:

- Layer 1

- The rebuild sync: High write IOPs, high network, and high vacuuming.

- Layer 2

- Sync to

public: We first reduced vacuuming by syncing directly topublicand keeping tables between syncs. - Then we added

where ... is distinct from. This traded high write IOPs for high read IOPs and got rid of our vacuuming problem. We still had high network.

- Sync to

- Layer 3

- Fingerprinting: By hoisting the diffing logic up to our workers, we solved both read IOPs and network on our database.