We’re Sequin. We stream data from services like Salesforce, Stripe, and AWS to messaging systems like Kafka and databases like Postgres. It’s the fastest way to build high-performance integrations you don’t need to worry about.

Most APIs get the basics of listing right. API designers know that paging from top-to-bottom is important, so there's usually a way to do it. But few seem to consider why the consumer is paging in the first place.

The most common need when polling and paginating is finding out what's changed in the API. Consumers need to find out what's changed in order to trigger events in their system. Or to update a local cache or copy that they have of the upstream API's data.

I'll call this usage pattern consuming changes.

Webhooks are supposed to help notify consumers of changes. But they have limitations:

- Webhooks do not guarantee delivery from a majority of providers.

- Many providers only support a subset of all possible updates via webhooks.

- You can't be down when a webhook is sent.

- You can't replay webhooks. If you store only a subset of data from a provider and change your mind later, you can't use webhooks to "backfill" holes in your data.

I discussed those limitations here. In that article, I advocated for using an /events endpoint whenever possible. An /events endpoint lists all creates, updates, and deletes in your system. An /events endpoint has almost all the same benefits of webhooks with none of the drawbacks.

But many APIs don't support webhooks and most don't have an /events endpoint.

You don't need either of these to have a great API. But you do need a way to consume changes to have a great API, because that's one of the most common usage patterns.

There aren't a lot of APIs that support consuming changes well. In this article, I'll propose a solution that's easy to add to an existing API. I'll call it the /updated endpoint, though it can be adapted to existing list endpoints. While there are a few ways to design an endpoint like this, I hope the specifics of my proposal will make clear what's required to make consuming changes easy and robust.

Proposal

To consume changes, consumers will need to paginate through a list endpoint. But cursors, pagination, and ordering are easy to get wrong. A good design helps minimize mistakes.

For the best aesthetics, I'd recommend having a dedicated endpoint for consuming changes, like this:

// for the subscriptions table in your API

GET /subscriptions/updated

Or a unified endpoint for all objects like this:

GET /updated?object=subscription

This endpoint sorts records by the last time they were updated. The endpoint should have one required parameter, updatedAfter, and one optional parameter, afterId. The combination of the two of these parameters is your consumer's cursor. The cursor is how a consumer paginates through this endpoint. I'll discuss pagination more in a moment.

To give you an idea of how these parameters would work behind the scenes, it might generate a SQL query that looks like this:

select * from subscriptions

where (

updatedAt == {{updatedAfter}}

and id > {{afterId}}

) OR updatedAt > {{updatedAfter}}

order by updatedAt,id asc

limit 100;

The cursor for the next page is embedded in every response: the updatedAt and id of the last record in the page before.

The cursor and its associated SQL query are designed to follow the golden rule of pagination: records can not be omitted if a consumer requests all pages in the stream in sequence. Duplicating records across pagination requests is also undesirable for many applications, so we account for that as well.

Many APIs have flaws in their design for paginating updated records. The benefits of this design:

- Record IDs provide a stable ordering when two or more records have the same

updatedAttimestamp. - You will not omit or duplicate objects in subsequent responses.

- Your consumers can initialize their cursors wherever in the stream they'd like. For example, let's say they want to start with everything updated starting after today. They'd set their cursor to

updatedAfter=now()and get to work. - Likewise, it makes "replaying" records a cinch.

Let's break this design down by considering its parts:

Why both afterId and updatedAfter?

You want both cursors for two reasons. Let's consider what happens if you use only updatedAfter without afterId.

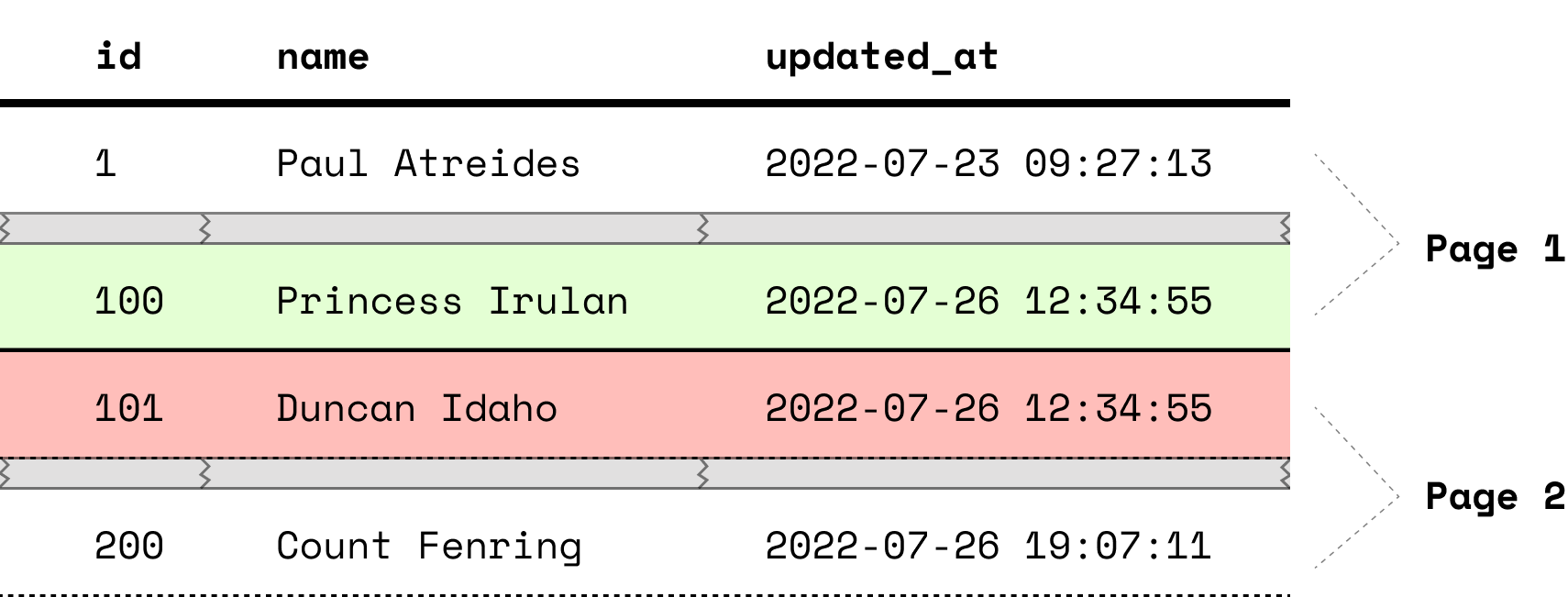

If you use only updatedAfter > {{updatedAfter}}, you risk omitting records during pagination. Imagine that two records are created or updated at the same time. Your API returns 100 records per request. One of these twin records is the 100th item in one request and the other is supposed to be the 1st in the next request:

After the first request, the consumer sets the updatedAfter cursor to that timestamp for the next request. But if the next request uses >, your consumer will never see the other record. This is an omission error.

To remedy, you might be tempted to use updatedAfter >= {{updatedAfter}}. But this introduces two problems.

First, the first record in each response will be the same as the last record in the previous response. This is a duplication error! The cursor afterId addresses the duplication error when using >=.

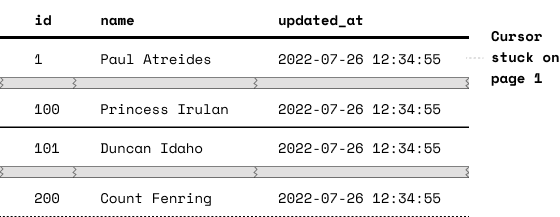

Second, consider what will happen with >= in a situation where a lot of records are created or updated at the same time. This can happen if the database is populated with a bulk-import or mass-updated (e.g. in a migration). Now, our pagination can get stuck. If we return 100 records that all have the same updatedAt to the user, they'll use that same updatedAt in their next request. We'll return the same 100 records:

We choose id as the secondary sorting parameter as the id is stable. We need something that will be stable between requests to avoid duplication or omission errors. We can confidently navigate a stream of 1000s of records that all have the same updatedAt so long as we have id to help us page through. (Another candidate is createdAt, but it's more confusing than using id.)

We can't just use id like this however:

updated_at >= {{updatedAfter}}

and id > {{afterId}}

This can cause an omission error in the following situation:

- A record is updated

- Consumer makes a request, grabs that update

- The record is updated again, before any other record is updated in that same table

Because the next request will be using the afterId of that record, the consumer will miss the latest update for it.

So that's why we only use the afterId filter in the specific situation that two records have the same updatedAt:

where (

updatedAt == {{updatedAfter}}

and id > {{afterId}}

) OR updatedAt > {{updatedAfter}}

Why is afterId optional?

The only reason is to make cursor initialization easier. Your consumer will decide where in the stream they want to get started (after which timestamp.) After they make their first request, they'll construct a complete cursor pair (afterId and updatedAfter) from the last record on that page.

One weakness is that this design leaves open a foot-gun where consumers can forget to use afterId after initialization. There may be some creative ways around this, like having a lower ceiling for a limit parameter when afterId is not present.

Why ascending and not descending?

The inverse of an after cursor is a before. Could we not design a system where consumers start at the tail end? They could use beforeUpdatedAt and beforeId to traverse the stream, backwards.

You can, but I think it's clumsier and makes it easier for your consumers to make a mistake.

Fundamentally, a record's updatedAt timestamp can only go up (ascend) yet you're consuming the stream in the opposite direction (descending).

When consuming in the ascending direction, the end is when you hit now() or there are no objects after your current updatedAt. When consuming in the descending direction, the consumer has to know when to stop. The stopping point is when you reach an updatedAt and id that is <= the first record you grabbed during your last pagination run. This means on top of storing cursors, your consumers have to store and manage this state. Confusing.

With the updatedAfter and afterId system, luckily you don't have to page through the whole history to get started. The consumer can initialize updatedAfter wherever they want and go from there.

Why use cursors at all? Why not just use pagination tokens?

Instead of having your consumers manage the cursors, you might consider using a pagination token. With a pagination token, your response includes a string the consumer can use to grab the next page of results.

They might call GET /orders/updated once with an updatedAfter cursor. Then all subsequent requests could use a pagination token. If a result set is empty (they've reached the end of the stream), you could still return a token. They'd use this token to check back in a moment to see if anything new has appeared. So, after the initial request with updatedAfter to initialize the stream, they would use tokens from that point forward.

You could easily make a pagination token by base64'ing a concatenation of the afterId and updatedAfter. That means the cursor is stateless and will never expire.

The biggest advantage I see is that it removes the issue where consumers could forget to send the afterId along with the updatedAfter in subsequent requests. It gives them just one string to manage. You return that string in your request to encourage them to use it from then on.

The disadvantage is that it's more opaque. It's harder for consumers to understand where they are in the stream just by looking at the cursor (is this sync a minute behind or a day behind?) Properties about the pagination token are not apparent on its face: does this token last forever? Does it account for errors of omission or duplication? Using afterId and updatedAfter will feel both robust and understandable to the consumer.

Taking /updated even further

Unified endpoint

You might consider one /updated endpoint that lists updated records across all your tables. For consumers that have a lower volume of updates to process, this would benefit both you and them: they can request one endpoint to find out what's changed as opposed to requesting each endpoint individually.

The one drawback is that for consumers with a high volume of updates to process, they can't process those updates in parallel. This is because you have a single cursor for a single stream.

A remedy is to have a single /updated endpoint but allow for filtering by a record's type. This will satisfy lower-volume consumers while allowing for parallel processing by high-volume consumers.

Deletes

One drawback of relying on just an /updated endpoint for consumers to list changes is that it won't list deletes. Consider adding a dedicated endpoint, /deleted. Or, if deletes are important to your consumers, you might want to invest in a general event stream like /events.

Client libraries

Wrap all this pagination business into friendly client libraries :) Not every consumer will reach for a client library, which is why it's important your API have good standalone aesthetics. But for the ones that do, you can present this rock-solid pagination system in an interface native to your consumer's programming language.